Google has started rolling out its Gemini Pro API to developers and organisations, along with a range of other AI tools, models, and infrastructure. Developers can now access the Gemini Pro API through Google AI Studio, and enterprises can access it through Google Cloud’s Vertex AI platform.

“We are moving quickly to bring the state-of-the-art capabilities of Gemini to all our services. And you should assume that we’re gonna bring it to every single surface that Google has,” Thomas Kurian, CEO of Google Cloud, told indianexpress.com in an interaction.

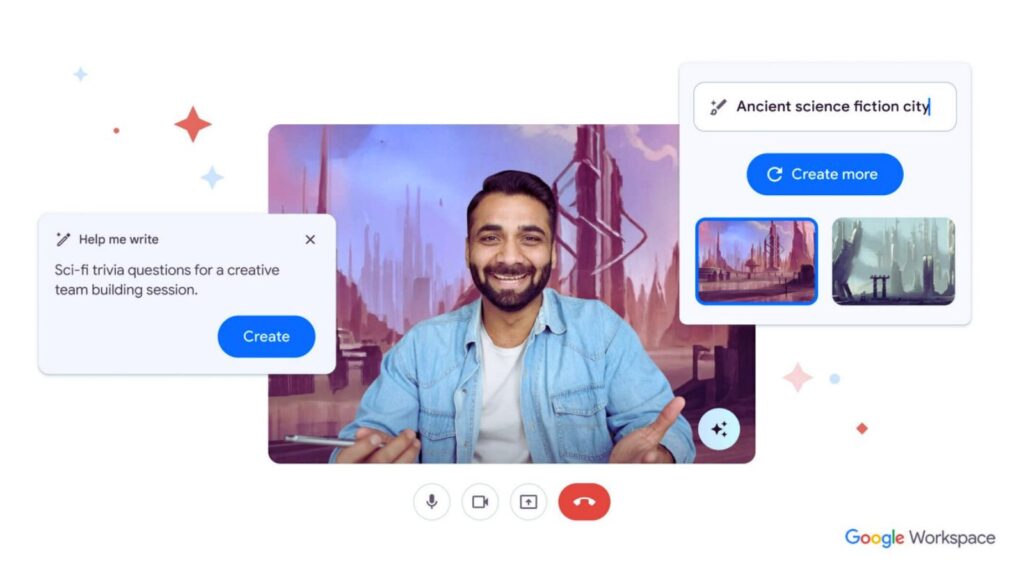

The tech giant has also introduced several other models in Vertex AI to help developers and enterprises build applications. These include an upgraded Imagen 2 text-to-image diffusion tool and MedLM, a family of foundation models fine-tuned for the healthcare industry (available to Google Cloud customers in the US). Google has also announced the general availability of Duet AI for developers and Duet AI in security operations.

Asked how Google planned to incorporate user feedback, especially from those using Gemini for information search, brainstorming, and coding, to refine and improve the AI’s capabilities, Kurian said Google has a highly disciplined process for introducing its models, which includes starting with a preview phase. This preview phase enables the team to collaborate closely with a broad community of developers and consumers and to incorporate their feedback as the models evolve.

“When you use one of our services and provide feedback, whether it’s a thumbs-up or a thumbs-down on the model’s performance, we have automated systems in place to collect this feedback. This process is referred to as reinforcement learning from human feedback, and it plays a crucial role in fine-tuning our models,” Kurian told indianexpress.com.

Thomas Kurian, CEO, Google Cloud.

The Google Cloud CEO added that these previews are intended to foster close collaborations, be it with individuals or with enterprises in specific industries. This collaboration is essential because different domains often have unique language nuances and requirements. “For instance, legal firms use a distinct writing style compared to medical professionals, and creative writers have their own unique approach. By engaging in previews and gathering feedback, we can work in harmony with users from various domains to ensure our models cater to their specific needs,” Kurian explained.

“We actively engage in hackathons, both as participants and hosts, with developers. This involvement allows us to stay closely connected to user feedback. We closely observe users as they go through our onboarding process, aiming to understand their specific goals and needs. This insight informs the design and development of our tools. We’ve been actively pursuing this approach, and it will remain a key part of our strategy,” added Josh Woodward, VP, Google Labs.

Building with Gemini Pro

While Google is making Gemini Pro available for developers and enterprises to build for their own use cases, the company has said that it will be fine-tuning the model in the coming weeks and months. The first version of Gemini Pro is now accessible via the Gemini API.

According to Google, Gemini Pro outperforms other similar-sized models on research benchmarks. While the current version comes with a 32K context window for text, future versions are expected to have larger windows. It is currently free to use within limits; Google said that it will be competitively priced at a later stage.

Some of the notable features include embeddings, function calling, semantic retrieval and custom knowledge grounding, and chat functionality. Gemini Pro supports 38 languages across over 180 countries and territories. Gemini Pro accepts text as input and generates text as output. Google has also created a dedicated Gemini Pro Vision endpoint that accepts text and imagery as input and text as output for multimodal use cases. SDKs are also available for Gemini Pro with support for Python, JavaScript, Android (Kotlin), Node.js, and Swift.

As of now, developers have free access to both Pro and Pro Vision through Google AI Studio for up to 60 requests per minute, which makes it suitable for most app development needs. Meanwhile, those using Vertex AI can try the same models with the same limits at no cost until general availability in early 2024.
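For developers planning around the 60 requests per minute free tier, the following is a minimal client-side sketch of how an application might pace its own calls. It is not part of any Google SDK: the class name `SlidingWindowLimiter`, its parameters, and the `send_request` function mentioned in the comments are all hypothetical, and how the service itself counts requests may differ from this sliding-window assumption.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls within any window of `period` seconds.

    Hypothetical helper for illustration; the defaults mirror the
    60 requests per minute figure quoted in the article.
    """

    def __init__(self, max_calls=60, period=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self._clock = clock      # injectable so the limiter can be tested
        self._sleep = sleep
        self._stamps = deque()   # timestamps of calls still inside the window

    def _evict(self, now):
        # Drop timestamps that have aged out of the window.
        while self._stamps and now - self._stamps[0] >= self.period:
            self._stamps.popleft()

    def try_acquire(self):
        """Record a call and return True if under the limit, else return False."""
        now = self._clock()
        self._evict(now)
        if len(self._stamps) < self.max_calls:
            self._stamps.append(now)
            return True
        return False

    def acquire(self):
        """Block until a call is allowed, then record it."""
        while not self.try_acquire():
            # Wait until the oldest recorded call leaves the window.
            wait = self.period - (self._clock() - self._stamps[0])
            self._sleep(max(wait, 0.0))


# Typical use (send_request is a placeholder for the application's own call):
#   limiter = SlidingWindowLimiter()
#   limiter.acquire()
#   send_request(prompt)
```

Calling `acquire()` before each request keeps the client under the limit by waiting locally instead of relying on the server to reject excess calls. An application would still want to handle quota errors returned by the API.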

Imagen 2 and MedLM on Vertex AI

Dubbed the most advanced text-to-image diffusion technology from Google DeepMind, Imagen 2 is now available on Vertex AI. Along with an array of new features, Imagen 2 delivers significantly improved image quality. It can also generate a wide range of logos for brands, businesses, and products, including emblems, abstract logos, and letter marks. Google claims that Imagen 2 can deliver results that most text-to-image models struggle with, and that it can render text in multiple languages.

MedLM is a family of foundation models fine-tuned for the healthcare industry. It is now generally available (via allowlist) to Google Cloud customers in the US through Vertex AI, and will also be launched on Model Garden in the coming weeks. It is built upon Med-PaLM 2, which was launched earlier this year.

Duet AI for Developers and Security Operations

Google has now made Duet AI available for developers and integrated it into Security Operations. Duet AI, an always-on collaborator on Google Cloud, offers AI-powered code and chat assistance to help users build applications using their favourite code editor and software development tools. With Duet AI in Security Operations, Google says it is the first major cloud provider to make generative AI available to defenders in a unified SecOps platform.

Along with the newly introduced capabilities, Google has announced competitive pricing and expanded its indemnification to help protect users from copyright-related concerns.

Credit: Indian Express