The history of artificial intelligence has been punctuated by periods of so-called “AI winter,” when the technology seemed to meet a dead end and funding dried up. Each one has been accompanied by proclamations that making machines truly intelligent is just too darned hard for humans to figure out.

Google’s release of Gemini, claimed to be a fundamentally new kind of AI model and the company’s most powerful to date, suggests that a new AI winter isn’t coming anytime soon. In fact, although the 12 months since ChatGPT launched have been a banner year for AI, there is good reason to think that the current AI boom is only getting started.

OpenAI didn’t have high expectations when it launched the “low-key research preview” called ChatGPT in November 2022. It was simply a test of a new interface for its text-generating large language models (LLMs). But the chatbot’s ability to do such a wide range of things, from synthesizing essays and poetry to solving coding problems, impressed and unnerved many people and set the tech industry aflame. When OpenAI added its new GPT-4 LLM to ChatGPT, some experts were so freaked out that they begged the company to slow down.

Evidence was already scant that anyone heeded that alarm call. A slowdown looks even less likely now that Google has upped the ante, and perhaps also changed the rules of the game, by announcing Gemini.

Google had already rushed out a direct response to ChatGPT earlier this year in the form of Bard, finally releasing LLM chatbot technology that it had developed before OpenAI but had chosen to keep private. With Gemini, it claims to have opened a new era that goes beyond LLMs anchored primarily to text, potentially setting the stage for a new round of AI products significantly different from those enabled by ChatGPT.

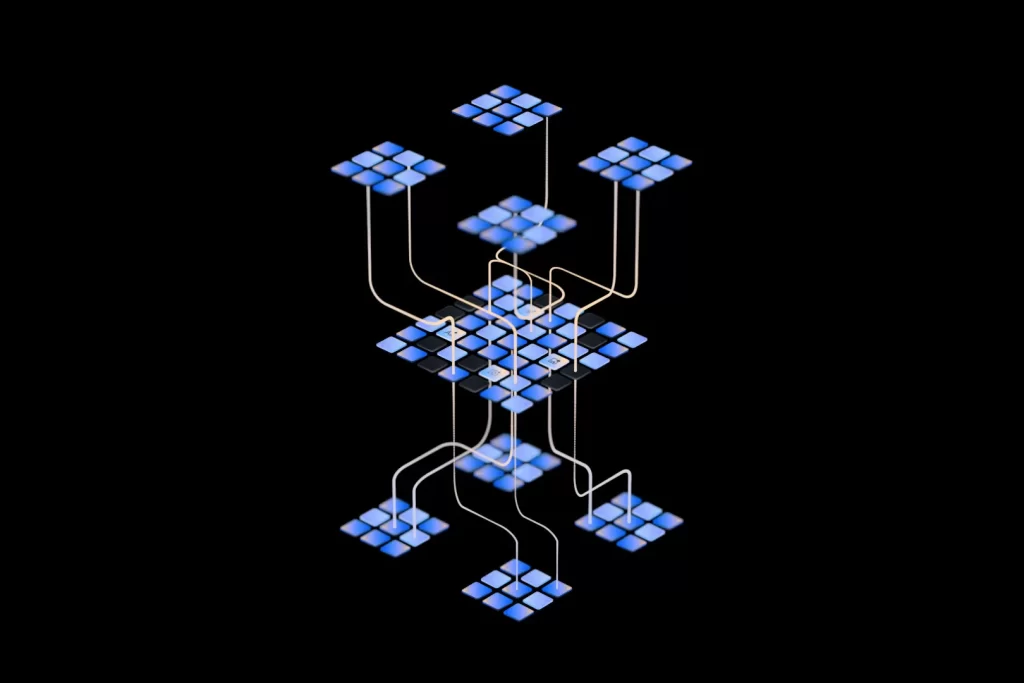

Google calls Gemini a “natively multimodal” model, meaning it can learn from data beyond just text, also slurping up insights from audio, video, and images. ChatGPT shows how AI models can learn an impressive amount about the world if provided enough text. And some AI researchers have argued that simply making language models bigger would increase their capabilities to the point of rivaling those of humans.

But there’s only so much you can learn about physical reality through the filter of text that humans have written about it. And the hard-to-eradicate limitations of LLMs like GPT-4, such as their tendency to hallucinate information, their poor reasoning, and their weird security flaws, seem to suggest that scaling existing technology has its limits.

Ahead of yesterday’s Gemini announcement, WIRED spoke with Demis Hassabis, the executive who led the development of Gemini and whose previous accomplishments include leading the team that developed the superhuman Go-playing bot AlphaGo. He was predictably effusive about Gemini, claiming it introduces new capabilities that will eventually make Google’s products stand out. But Hassabis also said that to deliver AI systems that can understand the world in ways that today’s chatbots can’t, LLMs will need to be combined with other AI techniques.

Hassabis is in an aggressive competition with OpenAI, but the rivals seem to agree that radical new approaches are needed. A mysterious project underway at OpenAI, called Q*, suggests that the company is also exploring ideas that involve doing more than just scaling up systems like GPT-4.

That fits with remarks made in April by OpenAI CEO Sam Altman at MIT, when he made clear that despite the success of ChatGPT, the field of AI needs a big new idea to make significant further progress. “I think we’re at the end of the era where it’s going to be these, like, giant, giant models,” Altman said. “We’ll make them better in other ways.”

Google may have just demonstrated an approach that can go beyond ChatGPT. But perhaps the most notable message from Gemini’s launch is that Google is set on driving toward something more significant than today’s chatbots—just as OpenAI appears to be, too.

Credit: Wired